Large language models (LLMs) evolve quickly, and new, more capable versions are released frequently. Getting access to the best of them, however, often means paying for several subscriptions and juggling multiple platforms and fragmented tools. Open Router (the service styles its name OpenRouter) is a unified interface that aggregates hundreds of LLMs from providers such as OpenAI, Google, Meta, and others behind a single API gateway with no subscription. You pay only for the tokens you consume, new models are typically available on the day they launch, and you keep more privacy and control.

In this post, we will walk you through the essential features of Open Router, demonstrate how it revolutionizes LLM access and benchmarking, and explore how you can integrate it into your workflows or developer projects.

What is Open Router and Why Should You Use It?

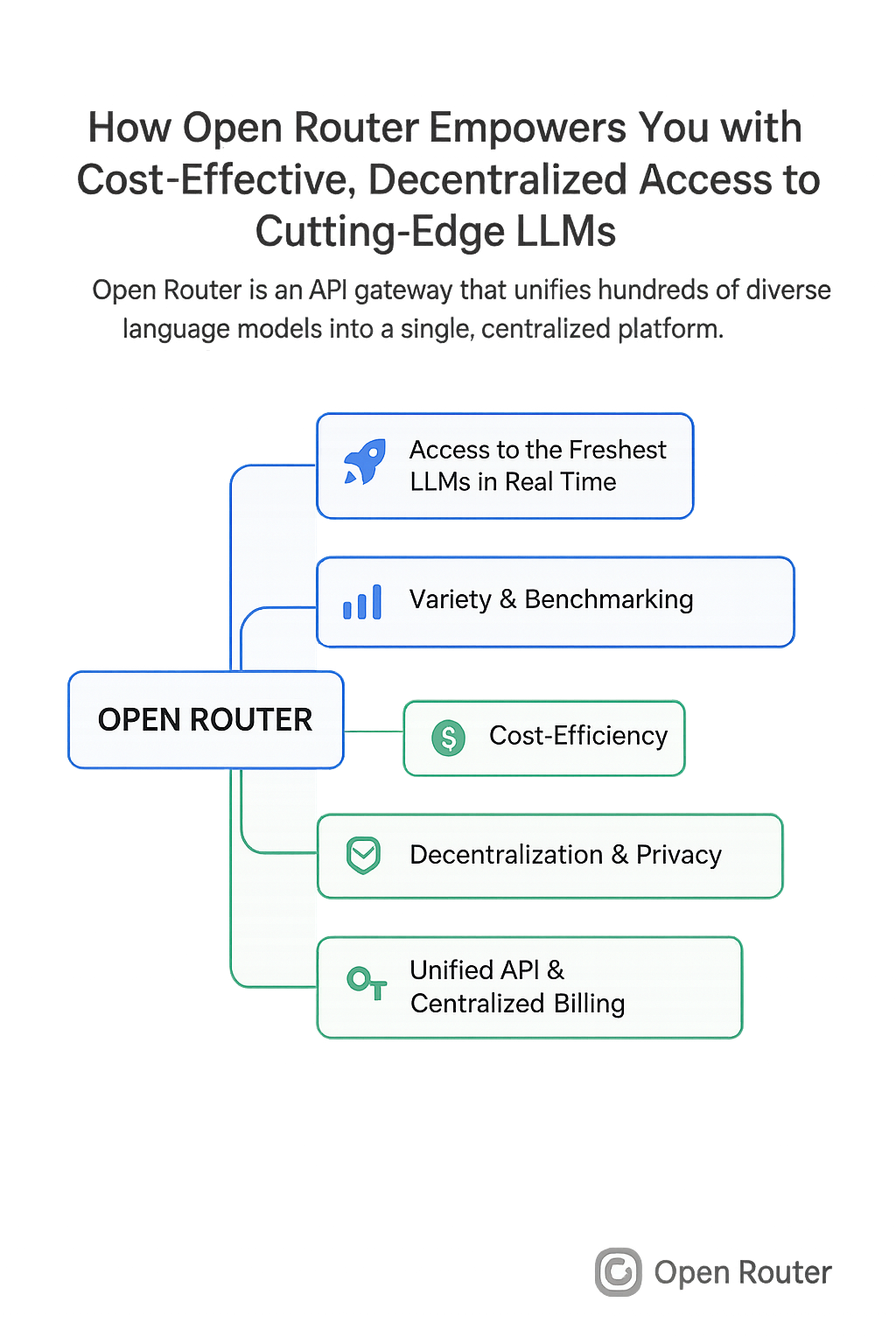

Open Router is an API gateway that unifies hundreds of diverse language models into a single, centralized platform. Unlike proprietary services such as ChatGPT or Google’s LLM offerings that require costly subscriptions, Open Router charges you solely based on usage, with flexible payment options — including crypto payments for added anonymity.

Key Benefits:

- Access to the freshest LLMs in real time: Models like OpenAI’s GPT-4.1 Pro, Mistral Magistral, and Gemini 2.5 Pro appear on Open Router the day they launch.

- Variety & Benchmarking: Use multiple models simultaneously to benchmark performance, quality, pricing, and reasoning capacity.

- Cost-efficiency: Pay only for tokens you consume; no fixed monthly fees.

- Decentralization & Privacy: Options to create accounts without real identity verification and pay with cryptocurrency.

- Unified API & Centralized Billing: Access dozens of providers under one API key with consolidated billing and activity monitoring.

Getting Started with Open Router

Step 1: Create an Account

Visit the Open Router homepage to create your account. You have two choices:

- Register with real credentials and a payment method (credit card or crypto).

- Create an account anonymously with no real identity required, enabling greater privacy.

Upon signup, you might receive free starter credits to explore the platform.

Step 2: Exploring the Interface

After logging in, you’ll discover a dashboard showcasing:

- The latest and most popular models (e.g., Gemini 2.5 Pro, Claude 4, OpenAI o3-pro).

- Applications and tools integrated with Open Router.

- Activity logs of token usage and API calls.

- Rankings of the most-used models, broken down by category such as programming or translation.

Step 3: Model Specifications at a Glance

Click any model to see vital technical details including:

- Context window size (the maximum number of tokens, prompt plus response, that the model can handle in one request).

- Cost per million input and output tokens.

- Provider information (OpenAI, Google, DeepSeek, etc.).

- Usage statistics and performance rankings.

This real-time transparency removes guesswork and lets you pick the model that best fits your needs.
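To make the pricing and context-window figures concrete, here is a minimal sketch of how you might turn them into a cost estimate. The function names and the prices are hypothetical placeholders, not values taken from any model page; substitute the numbers shown for the model you are evaluating.

```python
def estimate_cost_usd(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Estimate the cost of one request from per-million-token prices."""
    input_cost = input_tokens / 1_000_000 * input_price_per_m
    output_cost = output_tokens / 1_000_000 * output_price_per_m
    return input_cost + output_cost


def fits_context(prompt_tokens, max_output_tokens, context_window):
    """Check that the prompt plus the requested output fits in the context window."""
    return prompt_tokens + max_output_tokens <= context_window


# Hypothetical prices: $2.00 per million input tokens, $8.00 per million output tokens.
cost = estimate_cost_usd(1_500, 500, input_price_per_m=2.00, output_price_per_m=8.00)
print(f"Estimated cost: ${cost:.4f}")

# Hypothetical 200,000-token context window.
print(fits_context(prompt_tokens=150_000, max_output_tokens=60_000, context_window=200_000))
```

Running the same estimate for two or three candidate models is a quick way to see whether a more expensive model is affordable for your expected volume.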

How to Use Open Router for Chat and Benchmarking

Step 4: Create “Rooms” to Organize Your Work

Open Router uses a “room” concept akin to chat sessions or workspaces:

- Create as many rooms as you want to organize different projects or experimental setups.

- Assign different models to each room. You can select many models at once (ten or more).

- Customize each model’s parameters such as temperature (controls creativity vs determinism), sampling, and system prompts (defines the role or specialty of the model).

Step 5: Send Prompts and Compare Responses

Within a room, type your prompt once and receive answers from all selected models side by side. This setup lets you:

- Instantly compare reasoning styles and quality across American, Chinese, or various international models.

- Edit answers, copy selectively, or regenerate responses individually without disturbing others.

- Save chats, export conversations in Markdown or JSON formats for archiving or sharing.

- Import prior conversations to continue work or rerun benchmarks.
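The same side-by-side comparison can be reproduced in code. The sketch below is one possible approach, not Open Router's own implementation: `compare_models`, `to_markdown`, and `fake_send` are hypothetical names, and the Markdown layout is our own rather than the platform's export format. The `send` argument is a stand-in for whatever function actually calls the API, which keeps the example runnable without a key.

```python
import json


def compare_models(prompt, models, send):
    """Send one prompt to several models and collect the replies by model ID.

    `send` is any callable taking (model, prompt) and returning the reply text,
    so the HTTP layer can be swapped out or stubbed.
    """
    return {model: send(model, prompt) for model in models}


def to_markdown(prompt, replies):
    """Render the replies as a simple Markdown document, one section per model."""
    lines = ["# Prompt", "", prompt, ""]
    for model, reply in replies.items():
        lines += [f"## {model}", "", reply, ""]
    return "\n".join(lines)


def fake_send(model, prompt):
    # Stand-in for a real API call; returns a canned reply.
    return f"[{model}] reply to: {prompt}"


prompt = "Explain recursion in one sentence."
replies = compare_models(prompt, ["openai/o3-pro", "google/gemini-2.5-pro"], fake_send)
print(to_markdown(prompt, replies))
print(json.dumps(replies, indent=2))
```

In real use you would replace `fake_send` with a function that posts to the chat completions endpoint and returns the reply text.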

Step 6: Fine-Tune Model Behavior

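- Adjust parameters like temperature: 0 gives near-deterministic output, and higher values give more varied, creative output. Values up to about 1 are the usual working range, although the API documentation lists a range of 0 to 2.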

- Rename models in your room for easy identification, especially when running multiple variations.

- Manage providers — models may have multiple providers with varying speeds, reliability, and prices. Choose based on what matters for your task.
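When you move from the chat interface to the API, these settings become fields of the request body. The sketch below shows an assumed mapping: `build_payload` is a hypothetical helper, the `system` message and `temperature` field follow the OpenAI-style chat format that the Step 9 script also uses, and the `provider` field with a `sort` preference reflects our reading of Open Router's provider routing options, so check the current API reference before relying on it.

```python
def build_payload(model, user_prompt, system_prompt=None, temperature=None, provider_sort=None):
    """Assemble a chat-completions request body with optional tuning fields."""
    messages = []
    if system_prompt:
        # The system prompt defines the role or specialty of the model.
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})

    payload = {"model": model, "messages": messages}
    if temperature is not None:
        payload["temperature"] = temperature
    if provider_sort is not None:
        # Assumed field: routing preference such as "price", "throughput", or "latency".
        payload["provider"] = {"sort": provider_sort}
    return payload


payload = build_payload(
    "openai/o3-pro",
    "Summarize the benefits of unit tests.",
    system_prompt="You are a concise senior software engineer.",
    temperature=0.2,
    provider_sort="price",
)
print(payload)
```

Leaving an optional argument as `None` omits the field entirely, so the model's defaults apply.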

Step 7: Activate Web Search Augmentation

Open Router can integrate web searches to extend LLM knowledge with live internet data, enhancing responses with up-to-date information.
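Through the API, web search is, to our knowledge, enabled by appending an `:online` suffix to the model ID. Treat that suffix as an assumption to verify against the current documentation; `with_web_search` is a hypothetical helper written for this post.

```python
def with_web_search(model_id):
    """Return the model ID with the assumed `:online` suffix, added only once."""
    suffix = ":online"
    return model_id if model_id.endswith(suffix) else model_id + suffix


payload = {
    "model": with_web_search("openai/o3-pro"),
    "messages": [{"role": "user", "content": "What changed in Python this year?"}],
}
print(payload["model"])
```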

Advanced Use: Integrating Open Router with Automation Tools and Developer Environments

Step 8: API Keys and Developer Access

- Generate API keys in Open Router’s settings to use the platform in developer workflows.

- Easily connect to automation platforms like n8n, Make, or coding environments using Python, JavaScript, or curl commands.

- Benefit from simplified API documentation designed for fast integration.
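Once you have a key, it is safer to keep it out of your source code. A common pattern, sketched below, reads it from an environment variable; the variable name `OPENROUTER_API_KEY` and the helper `auth_headers` are our own choices, not something the platform requires.

```python
import os


def auth_headers(env_var="OPENROUTER_API_KEY"):
    """Build request headers from an API key stored in an environment variable."""
    api_key = os.environ.get(env_var)
    if not api_key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
```

Call `auth_headers()` wherever a script needs the `headers` dictionary, after exporting the variable in your shell or automation platform.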

Step 9: Sample Python Script for Chat Completion

Here’s a basic example to get you started using Python with Open Router’s API:

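    import requests

    API_KEY = "your_api_key_here"  # replace with a key generated in Open Router's settings

    headers = {
        "Authorization": f"Bearer {API_KEY}",
        "Content-Type": "application/json",
    }

    data = {
        # Model IDs use the "provider/model" form shown on each model page
        "model": "openai/o3-pro",
        "messages": [{"role": "user", "content": "Hello, how are you?"}],
    }

    # Chat completions endpoint of the Open Router API
    response = requests.post(
        "https://openrouter.ai/api/v1/chat/completions",
        headers=headers,
        json=data,
        timeout=60,
    )
    response.raise_for_status()  # stop early on HTTP errors such as an invalid key
    print(response.json())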

This script sends a prompt to the API and prints the full JSON response, which includes the model’s reply. Adjust the model name and prompt to suit your needs.
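In practice you usually want only the reply text rather than the whole JSON body. The sketch below assumes the response follows the OpenAI-style layout (`choices[0].message.content`) and that failures carry an `error` object; `extract_reply` and the sample dictionary are hypothetical and exist only to illustrate the parsing.

```python
def extract_reply(body):
    """Return the assistant text from a chat-completions response body."""
    if "error" in body:
        error = body["error"]
        # Error payloads are assumed to be either a dict with "message" or a plain string.
        message = error.get("message", error) if isinstance(error, dict) else error
        raise RuntimeError(f"API error: {message}")
    return body["choices"][0]["message"]["content"]


# Hand-written sample in the assumed response shape, not real API output.
sample = {
    "choices": [
        {"message": {"role": "assistant", "content": "I'm doing well, thanks!"}}
    ]
}
print(extract_reply(sample))
```

With the Step 9 script, you would call `extract_reply(response.json())` in place of the final `print`.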

Step 10: Automate and Scale

- Set credit limits to control spending.

- Monitor activity logs for detailed cost and usage insights.

- Use exported JSON data for training, analysis, or generating summary tables.

- Build workflows that dynamically select the best model based on your criteria—price, speed, or reasoning style.
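A dynamic selection workflow can start very simply. The sketch below picks a model from a small catalog by price or speed; `ModelInfo`, `pick_model`, the model IDs, and all the numbers are invented for illustration, so populate the catalog from the model pages or your own measurements.

```python
from dataclasses import dataclass


@dataclass
class ModelInfo:
    model_id: str
    price_per_m_output: float  # USD per million output tokens
    tokens_per_second: float   # throughput you measured or looked up


def pick_model(catalog, criterion):
    """Return the cheapest or the fastest model in the catalog."""
    if criterion == "price":
        return min(catalog, key=lambda m: m.price_per_m_output)
    if criterion == "speed":
        return max(catalog, key=lambda m: m.tokens_per_second)
    raise ValueError(f"Unknown criterion: {criterion!r}")


# Hypothetical catalog; the IDs and figures are placeholders.
catalog = [
    ModelInfo("example/model-a", price_per_m_output=8.0, tokens_per_second=40.0),
    ModelInfo("example/model-b", price_per_m_output=1.5, tokens_per_second=90.0),
    ModelInfo("example/model-c", price_per_m_output=0.5, tokens_per_second=25.0),
]

print(pick_model(catalog, "price").model_id)
print(pick_model(catalog, "speed").model_id)
```

The chosen `model_id` can then be placed in the `model` field of the request body, so the rest of the workflow stays unchanged when the selection rule changes.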

Why Benchmarking Multiple Language Models Matters

Open Router’s main strength is that it makes multi-model benchmarking easy. A single model may give biased or incomplete answers. Testing diverse models side by side:

- Reveals alternative viewpoints or reasoning.

- Highlights performance variances based on model architecture or provider.

- Reduces over-reliance on, and the risk of being misled by, any single AI.

With Open Router’s efficient token-based pricing, running such evaluations is affordable and practical, even at scale.

Conclusion

Open Router is a game-changing tool that democratizes access to elite LLMs by consolidating hundreds of models into a unified, affordable API gateway. It offers unmatched flexibility in testing, comparing, and deploying language models across projects, while respecting your privacy and budget. Whether you’re a beginner exploring chat interfaces or a developer automating complex AI agents, Open Router provides a robust and transparent platform.

