The AI landscape often presents a deceptive reality: while 90% of AI agents dazzle in demonstrations, they frequently falter when confronted with real users and complex scenarios. The issues are recurrent—edge cases, scalability problems, and unpredictable prompts. This common pitfall highlights a critical challenge: transitioning from a captivating prototype to a truly reliable, production-grade system. This article outlines a five-step roadmap to overcome this fragility, emphasizing engineering rigor and continuous improvement.

The Problem: Why Do AI Agents Often Break in Production?

The primary reason for the widespread failure of AI agents outside controlled lab environments is a lack of engineering rigor. Without a robust foundation, failure is almost guaranteed. The roadmap presented tackles this fragility head-on, starting with fundamental stability.

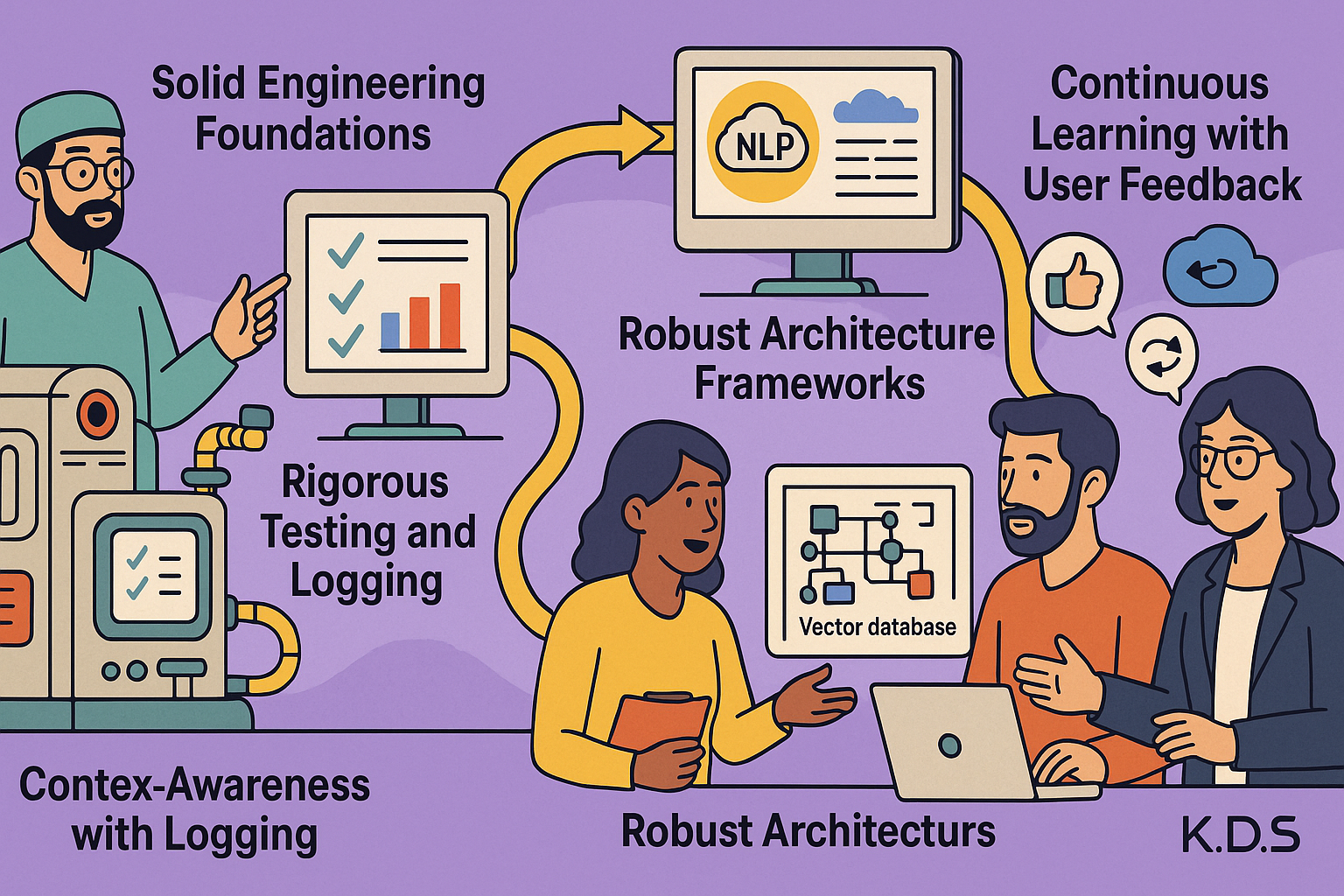

The 5-Step Roadmap to Reliable AI Agents

Step 1: Establish a Solid Technical Foundation (Stability)

The first step, while seemingly basic, is crucial: building a strong Python base for production. This involves leveraging specific tools and practices:

- FastAPI for Web APIs: This framework enables the creation of high-performance web APIs, essential for real-time interactions with AI agents.

- Asynchronous Programming: Implementing

asyncioprevents blocking operations, ensuring the agent remains responsive even under heavy load. - Pydantic for Data Validation: Pydantic ensures that all incoming and outgoing data conforms to expected formats, significantly reducing “stupid errors” caused by malformed inputs or outputs. This step structures the code, creating a solid foundation.

Step 2: Implement Robust Verification and Testing

Once the foundation is laid, it’s vital to verify that the agent performs as expected and doesn’t “go off the rails.” This involves two key practices:

- Comprehensive Logging: Logging provides “X-ray vision” into the agent’s internal workings. It allows developers to understand the flow of execution and diagnose what went wrong, especially during failures.

- Systematic Testing:

- Unit Tests: These are designed to catch small, immediate bugs in individual components.

- Integration Tests: These verify that the entire workflow functions correctly, ensuring all integrated parts communicate and perform as intended.

This transition from a “tinkering” mode to a production mindset is critical; as one expert noted, testing is what separates prototype thinking from production thinking.

Step 3: Enhance Contextual Awareness with Retrieval Augmented Generation (RAG)

A significant limitation of many AI agents is their reliance solely on pre-trained knowledge, leading to a lack of current or specific context. RAG addresses this by allowing the agent to fetch fresh, relevant information before generating a response.

- External Knowledge Bases: The agent actively searches external databases for pertinent information.

- Embeddings and Vector Databases: This involves mastering embeddings (numerical representations of meaning) and using vector databases (or even PostgreSQL for simpler cases) for efficient information retrieval.

- Document Chunking: Intelligent splitting of documents (chunking) is crucial to ensure relevant content is retrieved effectively.

- Relevance Evaluation: It’s essential to assess whether the retrieved information is truly pertinent.

This contextual deficiency often explains the 90% failure rate; RAG transforms the system from merely processing a prompt to a more reasoning and search-enabled entity.

Step 4: Develop a Robust Architecture

A truly robust AI agent is more than just a sequence of API calls; it’s a sophisticated system that manages internal states and controls flow.

- State Management: The agent must remember previous interactions and maintain a coherent conversation.

- Control Rules: Implementing rules helps the agent decide what actions to take.

- Frameworks for AI Agents: Frameworks like LangChain or AgentOS are invaluable. They assist in managing capabilities, routing, memory, and even communication between different agents, effectively serving as the indispensable backbone of the system.

Step 5: Implement Continuous Learning and Improvement

Launching the agent is merely the beginning. Continuous monitoring and learning in production are vital for ongoing reliability and enhancement.

- Production Monitoring: Tools like Langfuse or integrated functions within AgentOS allow real-time tracking of user interactions. This helps identify points of friction where users struggle or where the agent makes mistakes.

- Feedback Loop: Critically, this feedback is channeled back into the development cycle. Prompts can be refined, tools and RAG knowledge bases updated, and newly discovered edge cases addressed.

This iterative cycle is never truly finished, ensuring the agent constantly adapts and improves.

The Human Element: User Experience (UX)

Beyond technical robustness, a crucial dimension of reliability highlighted by experts is user experience. A technically perfect agent can still fail if the interaction is poor.

- Graceful Handling of Misunderstanding: How does the agent react when it doesn’t understand? Does it assist the user or leave them in limbo?

- Ambiguity and Off-Topic Questions: The agent must manage ambiguous inputs or off-topic questions without appearing unintelligent.

True robustness emerges from the fusion of solid engineering (testing, logging, RAG, architecture) and a UX design that anticipates frictions and provides elegant exits when interactions become complex.

Conclusion

To escape the trap of the 90% failure rate, building reliable AI agents requires a blend of technical rigor, contextual intelligence through RAG, continuous monitoring, and thoughtful UX design. The challenge isn’t creating a momentarily impressive demo but rather constructing and maintaining a reliable, adaptable, and learning system. This demands a fundamental shift in mindset from prototyping to production-grade engineering.

The next frontier lies in not just making agents reliable but truly trustworthy, especially when facing complex and unpredictable human interactions.

🔍 Discover Kaptan Data Solutions — your partner for medical-physics data science & QA!

We're a French startup dedicated to building innovative web applications for medical physics, and quality assurance (QA).

Our mission: provide hospitals, cancer centers and dosimetry labs with powerful, intuitive and compliant tools that streamline beam-data acquisition, analysis and reporting.

🌐 Explore all our medical-physics services and tech updates

💻 Test our ready-to-use QA dashboards online

Our expertise covers:

🔬 Patient-specific dosimetry and image QA (EPID, portal dosimetry)

📈 Statistical Process Control (SPC) & anomaly detection for beam data

🤖 Automated QA workflows with n8n + AI agents (predictive maintenance)

📑 DICOM-RT / HL7 compliant reporting and audit trails

Leveraging advanced Python analytics and n8n orchestration, we help physicists automate routine QA, detect drifts early and generate regulatory-ready PDFs in one click.

Ready to boost treatment quality and uptime? Let’s discuss your linac challenges and design a tailor-made solution!

Get in touch to discuss your specific requirements and discover how our tailor-made solutions can help you unlock the value of your data, make informed decisions, and boost operational performance!

Comments