In the rapidly evolving world of artificial intelligence and AI agents, one term is gaining significant traction: MCP. While many are discussing it, fewer truly grasp what it is, its purpose, and how to harness its immense power. This article aims to demystify the Model Context Protocol (MCP), explain its revolutionary impact, and show you how to leverage it in your daily life, starting from scratch with concrete examples.

Understanding the Limitations of Traditional LLMs

Before diving into MCP, let’s understand why it’s so crucial. When you interact with large language models (LLMs) like ChatGPT or Claude through a conversational interface, you type a prompt, and the AI responds with text. At its core, an LLM is designed to predict the next most probable word based on vast amounts of text data. It excels at generating coherent and contextually relevant text.

However, LLMs face a significant limitation: they can only communicate via text. When asked to perform an action, such as “Can you send an email to shubham@harma.fr?”, an LLM will typically respond that it cannot because it’s not connected to the Gmail service. This highlights a fundamental challenge: LLMs, by themselves, cannot directly interact with external applications or execute actions in the real world.

The traditional solution involved Application Programming Interfaces (APIs). Many applications, like Gmail, Notion, and various web services, offer APIs that allow them to connect with other applications. One might wonder why LLM developers haven’t simply created connectors for every possible API. The answer lies in the sheer complexity and lack of standardization.

Every application’s API is unique. Integrating them requires significant effort from LLM developers to understand each API’s specific structure and build a custom connector. This “N x M” problem means that for every LLM and every application, a unique integration must be built. These integrations are costly, slow to develop, and prone to breaking whenever an API undergoes an update. This scenario created a race among LLM providers to build as many integrations as possible, a task that proved incredibly difficult.

The Rise of the Model Context Protocol (MCP)

Recognizing this critical bottleneck, Anthropic, the team behind the Claude LLM, conceived a revolutionary idea: instead of relying on disparate and non-standardized APIs, create a universal standard for communication between LLMs and applications. This new standard is called the Model Context Protocol (MCP).

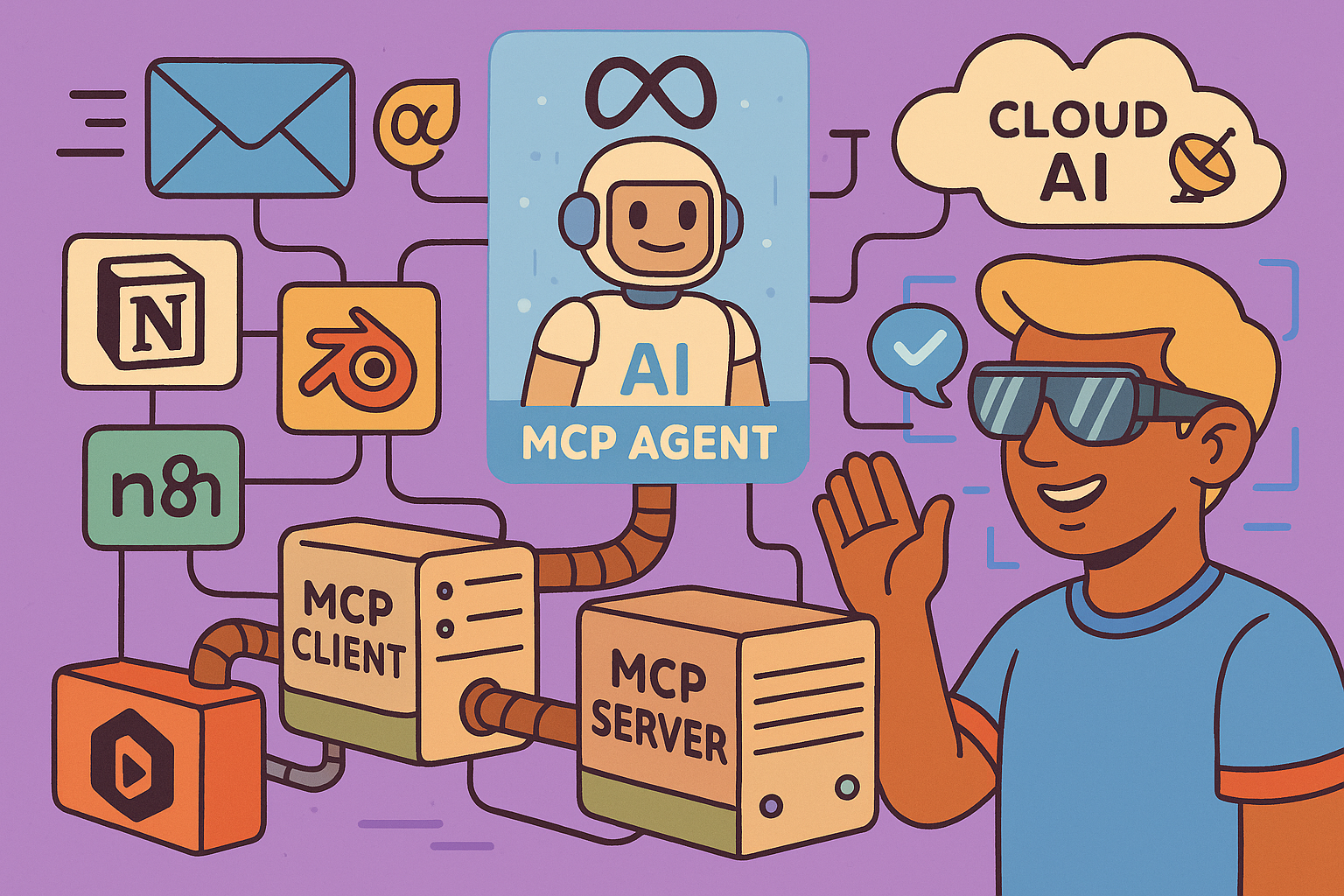

Think of MCP as the USB-C of AI integrations. Just as USB-C provides a single, standardized port for various devices, MCP provides a unified language for applications to communicate with LLMs and AI agents. This means that applications are no longer solely responsible for creating unique APIs, nor are LLMs burdened with building countless custom connectors. Instead, applications leverage an MCP server, and LLMs or AI agents use an MCP client. If both adhere to the MCP standard, they can communicate seamlessly.

This standardization simplifies connectivity for both sides. Applications now only need to implement the MCP standard to expose their functionalities, and LLMs/AI agents can connect to any MCP-compliant application with a single, unified approach.

MCP in Action: Concrete Demonstrations

Let’s explore how MCP translates into tangible capabilities:

1. Notion and Claude Integration

Notion, a popular workspace tool, recently released its MCP server. This allows LLMs like Claude to directly interact with Notion, enabling unprecedented levels of automation.

How it works

- Connect Notion to Claude: Within Notion’s connection settings, you can link your Notion workspace to Claude. This establishes the MCP connection.

- Request page creation: You can then instruct Claude, “Can you create a page about MCP in my private Notion space?”

- Automated content generation: Claude will not only understand your request but also execute it. It will create a new private page in Notion, complete with structured text, headings, and even pre-populated content about MCP.

- Dynamic database creation: The power goes further. You can ask Claude to “create a database on this page with all known MCP servers.” Claude will then generate a fully functional Notion database, including appropriate columns (properties) and statuses, without you needing to manually define them. It understands Notion’s internal structure and properties through the MCP server.

This demonstrates how MCP transforms an LLM from a mere conversational partner into an active co-worker capable of performing complex, multi-step actions within an application.

2. Automating Workflows with n8n

n8n is a powerful open-source automation tool that allows users to build complex workflows without extensive coding. Traditionally, automating tasks with n8n involved manually configuring nodes and understanding API connections. With MCP, this process becomes much more intuitive.

How it works

- Instruct Claude to build a workflow: You can tell Claude, “I want an automation on n8n that publishes a YouTube video to Twitter, sends an email via Gmail, adds it to an Airtable database, and sends an SMS via WhatsApp, immediately after the video is published on my channel.”

- Claude generates the n8n workflow: Claude, leveraging an unofficial n8n MCP, will then generate the skeletal structure of this complex workflow within your n8n instance. While some credentials might still need to be configured, the core logic and interconnected nodes are automatically laid out.

- Advanced workflow creation: Claude’s understanding extends to more complex n8n functionalities, such as the “code” node, which typically requires developer knowledge. For example, you could ask for an automation that categorizes incoming emails using a GPT module, drafts a reply, and adds it as a sub-message. Claude can generate this intricate workflow, including the specific prompts for the GPT module.

This illustrates how MCP empowers LLMs to abstract away the technical complexities of automation tools, enabling users to build sophisticated workflows purely through natural language commands.

3. 3D Design in Blender

MCP isn’t limited to web-based applications. It extends to desktop software as well, like the 3D modeling suite Blender.

How it works

- Connect Claude to Blender: Using a Blender MCP, Claude can be given the ability to interact with the software.

- Generate 3D models from text and images: You can then ask Claude to “create a basketball in Blender” or “create a sandcastle in Blender based on this image.”

- Automated 3D object creation: Claude will then instruct Blender to generate the requested object, including meshes, textures, and even intricate details like brick patterns for a sandcastle. This happens without manual intervention, showcasing the profound impact of MCP on creative workflows.

These examples highlight MCP’s ability to turn natural language instructions into concrete actions across diverse software environments, from productivity tools to complex creative applications.

The Client-Server Architecture of MCP

To grasp how MCP functions, it’s essential to understand its client-server architecture:

- MCP Server: Every application that wants to be MCP-compliant needs to expose an MCP server. This server’s role is to list all the functionalities it offers (e.g., “create page,” “create database,” “move file”), the types of resources it manages, and the accepted format for instructions (prompts).

- MCP Client: LLMs and AI agents act as MCP clients. When a client needs to interact with an application, it queries the application’s MCP server to understand what actions are possible and how to request them.

This standardized communication protocol ensures that clients and servers “speak the same language,” regardless of the underlying application’s complexity. MCP servers can be hosted online (like Notion), locally on a user’s machine (like Blender), or on a private server (like a self-hosted n8n instance). Regardless of their location, they communicate uniformly via MCP.

The Future of AI Agents and MCP

MCP fundamentally reshapes the landscape of AI agents. Previously, AI agents needed to be explicitly taught how to use each tool and its unique API. With MCP, this process is dramatically simplified.

Imagine an AI agent with a single “MCP client” node. This node can connect to any MCP-compliant application, eliminating the need for separate integrations for every service. For instance, an agent could directly interact with your bank account if the bank offers an MCP server, allowing it to retrieve statements or make transactions based on your instructions, without complex API configurations.

This decentralized approach means that anyone can create an MCP server for their product. In the future, everything from refrigerators to coffee machines could have an MCP server, allowing AI agents to interact with them seamlessly.

MCP is not just a technical upgrade; it’s the new backbone and “hands” of AI agents. It’s what allows LLMs to move beyond mere conversation and truly perform actions in our digital and physical worlds. This isn’t a distant future; it’s the present reality, rapidly accelerating and becoming more accessible.

🔍 Discover Kaptan Data Solutions — your partner for medical-physics data science & QA!

We're a French startup dedicated to building innovative web applications for medical physics, and quality assurance (QA).

Our mission: provide hospitals, cancer centers and dosimetry labs with powerful, intuitive and compliant tools that streamline beam-data acquisition, analysis and reporting.

🌐 Explore all our medical-physics services and tech updates

💻 Test our ready-to-use QA dashboards online

Our expertise covers:

🔬 Patient-specific dosimetry and image QA (EPID, portal dosimetry)

📈 Statistical Process Control (SPC) & anomaly detection for beam data

🤖 Automated QA workflows with n8n + AI agents (predictive maintenance)

📑 DICOM-RT / HL7 compliant reporting and audit trails

Leveraging advanced Python analytics and n8n orchestration, we help physicists automate routine QA, detect drifts early and generate regulatory-ready PDFs in one click.

Ready to boost treatment quality and uptime? Let’s discuss your linac challenges and design a tailor-made solution!

Get in touch to discuss your specific requirements and discover how our tailor-made solutions can help you unlock the value of your data, make informed decisions, and boost operational performance!

Comments