OpenAI has officially launched Sora 2, its latest video generation model, free to use at launch. The release follows months of relative silence since the initial Sora demo in February 2024 and a somewhat underwhelming official release of Sora 1 in December 2024. With Sora 2, OpenAI aims for a spectacular comeback, positioning it as the “GPT-3.5 moment” for video. This article explores why Sora 2 is generating so much buzz and what it could mean for the future of content creation.

The Journey to Sora 2: From Hype to Reality

In February 2024, OpenAI first showcased Sora with a captivating demo of a woman walking through neon-lit Tokyo streets. The fluid motion, the reflections on wet pavement, and the intricate details immediately captured the tech world’s imagination. However, the subsequent release of Sora 1 in December 2024 proved disappointing, with flaws such as teleporting objects, bizarre character deformations, and inconsistent physics. During this period, Google’s Veo 3 quietly gained market share, putting OpenAI at a perceived disadvantage.

Now, Sora 2 arrives not as a mere patch but as a transformative update designed to overcome these previous hurdles and revolutionize AI video generation.

What Makes Sora 2 a Game-Changer

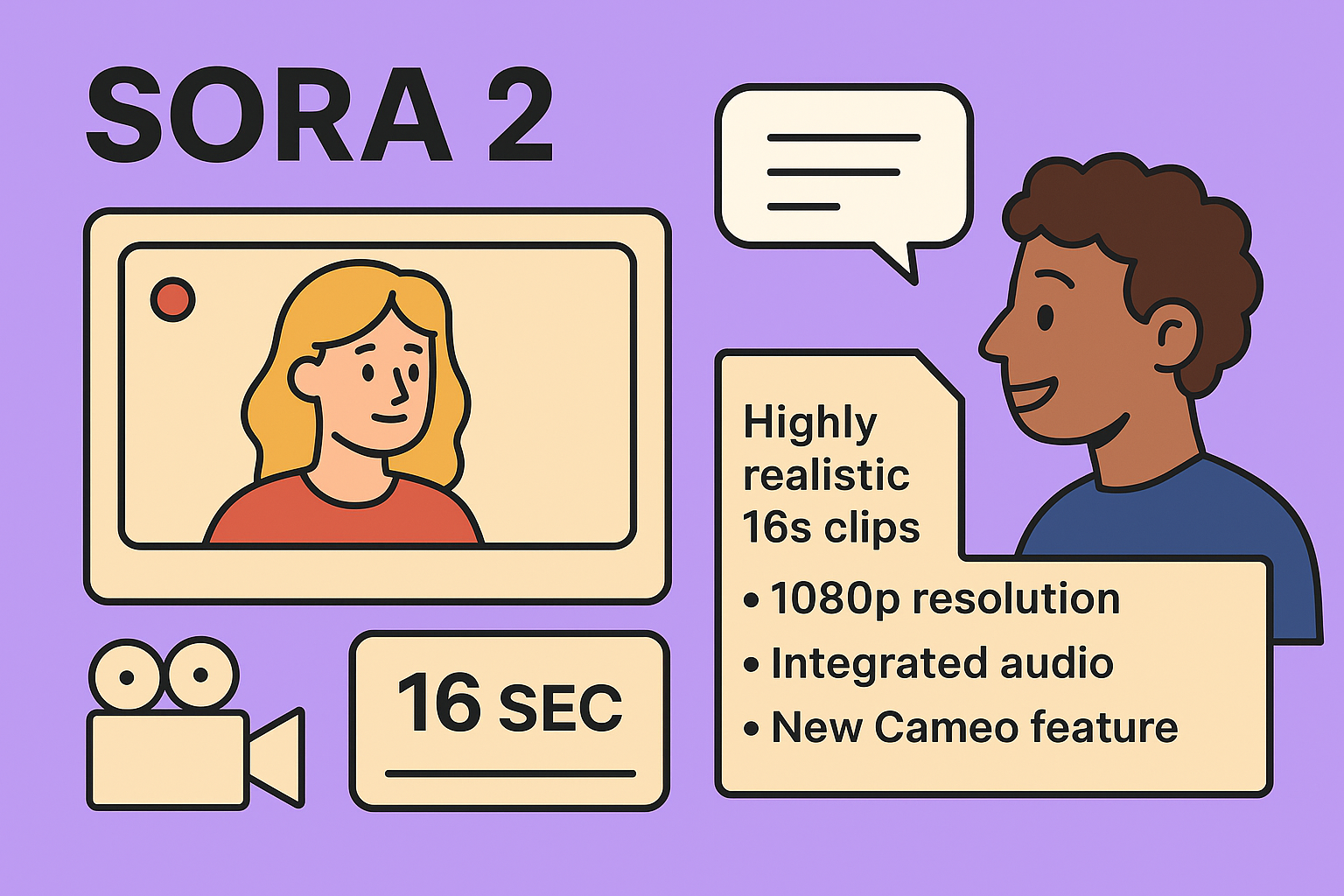

1. Extended Video Length and High Resolution

Sora 2 can generate 16 seconds of video at once, double the 8-second limit of Veo 3. This extended duration significantly enhances narrative capabilities, allowing for more coherent storytelling with a clear beginning, middle, and end, rather than just short, looped clips. The model also supports native 1080p resolution, with an optional 4K output for professional users.

2. Integrated Audio Generation

In a first for OpenAI’s video models, Sora 2 generates audio natively. Generated videos now come with synchronized dialogue, sound effects, and even background music. Users no longer need to rely on third-party tools to add audio, which drastically simplifies the production workflow. Demo examples include a “digital Shakespeare” rapping with perfect lip-sync.

3. Enhanced Understanding of Physics and the World

OpenAI emphasizes Sora 2’s improved understanding of physics and real-world interactions. Previous models often distorted reality to fulfill prompts, leading to unrealistic outcomes (e.g., a basketball magically entering the hoop despite a missed shot). Sora 2, however, can simulate realistic failures. If a basketball player misses a shot, the ball will credibly bounce off the backboard. This subtle but crucial improvement allows for the creation of more believable and immersive simulated worlds.

Examples include gymnasts performing fluid movements on a balance beam, with anatomically consistent bodies and the beam reacting realistically to weight and displacement. Another example shows backflips on a floating plank, where the plank realistically deforms under weight and the water reacts to the movement. Even the classic Dalmatian demo from the original Sora shows noticeable improvements, with natural movements, proper paw interaction with the ground, and logical shadows. While a slight artificiality may still be present, it’s becoming increasingly difficult to spot without close inspection.

4. Improved Narrative Coherence and Persistence

Sora 2 is designed to better understand and execute complex narrative instructions. It can maintain consistency of characters, attire, faces, and environments across multiple shots within a sequence. This persistence was a major weakness in Sora 1, where characters and objects often changed subtly or disappeared randomly between cuts. Sora 2 effectively addresses this “Achilles’ heel.” The model particularly excels in two stylistic categories: realistic cinematic footage and anime-style animation.

5. “Cameo” Feature: Personalizing AI Creations

The standout feature of Sora 2 is “Cameo,” which allows users to insert themselves into AI-generated videos. By recording a short video following a specific script, the system captures the user’s face, voice, and expressions. This personalized digital avatar can then appear in any Sora 2 scene, allowing users to imagine themselves surfing giant waves or interacting with mythological creatures.

Sora 2 also aims to clone voice, enabling Cameo avatars to speak with the user’s vocal timbre. OpenAI has implemented three levels of privacy for Cameos: private (only the user can use their Cameo), restricted (specific individuals can be authorized), and public (anyone on the platform can use the user’s Cameo). Security measures include identity verification with facial recognition and randomized reading scripts to prevent unauthorized cloning of appearances.
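The three privacy levels described above amount to a small access-control rule. The following is a minimal sketch of that rule, not OpenAI's implementation: the names `CameoVisibility`, `Cameo`, `allowed_users`, and `can_use_cameo` are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class CameoVisibility(Enum):
    """Hypothetical labels for the three privacy levels named in the article."""
    PRIVATE = "private"        # only the owner can use the Cameo
    RESTRICTED = "restricted"  # the owner plus explicitly authorized users
    PUBLIC = "public"          # anyone on the platform


@dataclass
class Cameo:
    """Illustrative record; not a real Sora 2 data structure."""
    owner: str
    visibility: CameoVisibility = CameoVisibility.PRIVATE
    allowed_users: set[str] = field(default_factory=set)


def can_use_cameo(cameo: Cameo, requester: str) -> bool:
    """Return True if `requester` may place this Cameo in a video."""
    if requester == cameo.owner:
        return True  # the owner always keeps access
    if cameo.visibility is CameoVisibility.PUBLIC:
        return True
    if cameo.visibility is CameoVisibility.RESTRICTED:
        return requester in cameo.allowed_users
    return False  # PRIVATE: nobody but the owner


if __name__ == "__main__":
    cameo = Cameo("alice", CameoVisibility.RESTRICTED, {"bob"})
    print(can_use_cameo(cameo, "bob"))    # authorized by the owner
    print(can_use_cameo(cameo, "carol"))  # not on the allow list
```

Defaulting to `PRIVATE` in this sketch reflects a conservative design choice: a likeness is shared only after the owner explicitly opts in.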

Distribution, Pricing, and Guardrails

Mobile-First Strategy and Community Building

Sora 2 is initially launching as an iOS mobile application, bypassing a full web version for now, although updates to sora.com are anticipated. The app’s interface resembles TikTok, featuring a vertical feed of 10-second video clips. However, unlike TikTok, each clip is an AI generation. Users can create their own content from text prompts or remix existing shared content by changing styles, adding music, or inserting their Cameos. OpenAI is clearly building a social network around AI content creation.

Access is currently invitation-only, with priority given to heavy Sora 1 users, followed by ChatGPT Pro subscribers, then Plus and Team subscribers, and finally the general public. This exclusive, progressive rollout aims to generate buzz, though it may frustrate those eager to test the platform immediately. The strategy contrasts with Meta’s recent “Vibes” launch, which was met with negative feedback for its “soulless synthetic content.” OpenAI hopes to foster collaborative creation rather than passive consumption.
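The priority order above can be read as a simple ranking over a waitlist. The sketch below is purely illustrative and assumes nothing about how OpenAI actually issues invitations: the tier labels in `ROLLOUT_ORDER` and the function `invitation_order` are hypothetical names.

```python
# Hypothetical tier labels, listed in the priority order the article describes.
ROLLOUT_ORDER = [
    "heavy_sora1_user",
    "chatgpt_pro",
    "chatgpt_plus_or_team",
    "general_public",
]


def invitation_order(waitlist):
    """Sort (name, tier) pairs by rollout priority.

    Python's sort is stable, so people in the same tier keep their
    original waitlist order.
    """
    rank = {tier: position for position, tier in enumerate(ROLLOUT_ORDER)}
    return sorted(waitlist, key=lambda entry: rank[entry[1]])


if __name__ == "__main__":
    waitlist = [
        ("dana", "general_public"),
        ("eli", "chatgpt_pro"),
        ("fay", "heavy_sora1_user"),
    ]
    for name, tier in invitation_order(waitlist):
        print(name, tier)
```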

Free at Launch with Tiered Access

Sora 2 will be free at launch with generous usage limits, though these are subject to computational constraints. ChatGPT Pro subscribers ($200/month) gain access to Sora 2 Pro, an experimental version with fewer restrictions and potentially better quality. Additional fees might apply during periods of high demand. An API for developers is also in the pipeline.

Strict Safety and Copyright Measures

OpenAI has implemented tight safety guardrails. Prompts will undergo strict filtering, with content barriers gradually relaxed once the system is confirmed not to produce problematic content. All generated videos will include C2PA metadata attesting to their artificial origin, plus a visible watermark on downloads.

OpenAI’s approach to copyright has stirred controversy in Hollywood, although the details have not been spelled out.

Sora 2 vs. the Competition (Veo 3)

While Google’s Veo 3 excels in pure cinematic quality with 4K rendering and refined native audio (including dialogue, special effects, and integrated music) for short projects, Sora 2 prioritizes duration and narrative flexibility. The 16-second duration versus Veo 3’s 8 seconds is a significant advantage for comprehensive storytelling.

Sora 2 also benefits from seamless integration into the ChatGPT ecosystem and distribution to its 500 million weekly users, offering a massive adoption advantage that Veo 3 currently lacks, despite its potential technical superiority.

Remaining Limitations and Future Implications

Despite the advancements, Sora 2 still has limitations. Physics can be imperfect in complex edge cases, dynamic camera movements can sometimes destabilize scene coherence, and interactions between multiple objects or characters may exhibit subtle glitches. The artificial “sheen” distinguishing AI-generated videos from real footage, while diminishing, is still present. Sora 2 represents a major step forward, but not a final destination.

The impact on content creation is profound. These tools drastically lower the barrier to entry for producing professional-quality videos. Anyone with a smartphone and an idea can now create clips that would have required a full production team just two years ago. This democratization opens massive opportunities for individual creators and small businesses, but it also risks saturating the market with synthetic content of varying quality. Standing out will become increasingly challenging.

The skills that truly matter are evolving. While AI handles video creation, human capabilities in conceptualizing original, emotionally resonant ideas will be paramount. Mastering prompt engineering, developing a keen aesthetic and narrative sense, and creatively combining multiple tools will be crucial. The rapid evolution of AI means Sora 2 will likely be obsolete in six months, underscoring the need for continuous learning and adaptation. Understanding how these tools work, their strengths, weaknesses, and how to integrate them into productive workflows will differentiate those who thrive from those who fall behind.

OpenAI has re-entered the race with considerable force. Sora 2, though not perfect, crosses the critical threshold of real usability for the general public. Its 16-second duration, native audio, improved physics, and free, accessible application distribution are game-changers. This battle in AI video generation is just beginning, and fierce competition will undoubtedly lead to even more powerful tools for users.
