The field of Artificial Intelligence is on the cusp of another revolution, thanks to groundbreaking research from Meta’s team, led by the renowned Yann LeCun. Their latest paper, “Learning Latent Action World Models in the Wild,” introduces a paradigm shift in how AI understands and interacts with the physical world. This research posits that AI can comprehend physics and the consequences of its actions autonomously, without explicit human annotation. This advancement is deemed a crucial step towards achieving Artificial General Intelligence (AGI), enabling AI to transition from virtual environments to real-world applications within the next five years.

The Core Problem: The Bottleneck of Action Annotation

Current AI systems face a significant hurdle: the immense cost and time involved in annotating millions of actions within video data. Imagine trying to label every steering wheel angle and accelerator pressure in a driving video, or the exact coordinates of a dancer’s limbs. This manual annotation is simply not scalable. Datasets like YouTube Temporal 1B, with over a billion video clips, and HowTo100M with 136 million clips, are largely unannotated regarding actions, presenting a vast, unstructured trove of information.

Previous approaches to address this relied on simplified “world models” – AI systems that predict future events. While these have shown promise in controlled environments like simple video games, they struggle with the complexity and unpredictability of real-world scenarios. Without explicit action information, predictions become vague, control impossible, and planning futile. Discrete actions (like “move left,” “jump”) are too rigid for nuanced natural videos.

The Revolutionary Solution: Latent Action World Models

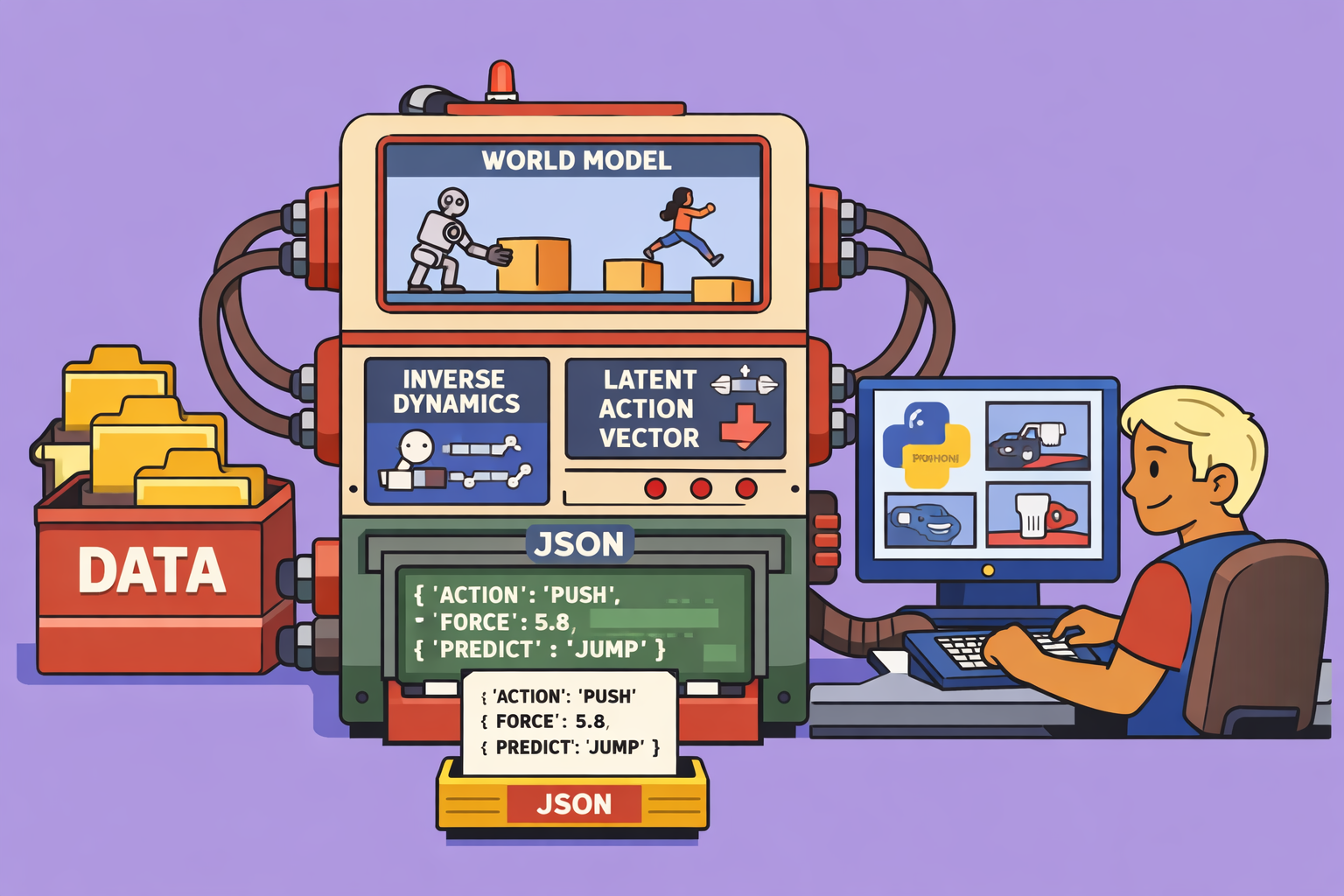

This new paper introduces a revolutionary approach: learning to model actions automatically from unannotated YouTube videos. The key innovation lies in Latent Actions.

What are Latent Actions?

A latent action is an action that is not directly observed but is inferred from observations. Think of it like a detective arriving at a crime scene: they didn’t witness the event but can deduce what happened by observing displaced objects. Similarly, an AI system can infer the underlying actions causing changes between two video frames.

The system employs two core components:

- Inverse Dynamics Model (IDM) – The Detective: This component observes a past state and a future state (two consecutive video frames) and infers the latent action that must have occurred to cause the change.

- Forward Model (WM) – The Oracle: This component takes a past state and a latent action as input, and then predicts the future state.

These two models are trained jointly. The IDM tries to guess the action that transforms frame A into frame B, and the Forward Model then tries to predict frame B using frame A and the IDM’s guessed action. The errors from both are used to refine the models, a process akin to a chef constantly iterating on a recipe and cooking process to get the perfect dish.

Preventing “Cheating” – Regularization Techniques

A critical challenge is to prevent the IDM from “cheating” by simply encoding the entire next frame into the latent action, rather than truly understanding the underlying action. To combat this, three regularization methods are employed:

- Sparsity: This forces latent actions to have minimal non-zero components, meaning only a few key dimensions are active at any given time. It’s like conveying maximum meaning with minimum words.

- Noisy: Adding Gaussian noise to latent actions and then forcing them towards a standard Gaussian distribution. This makes the model more robust by training it in noisy conditions, similar to understanding speech in a bustling café.

- Quantization: Limiting latent actions to a discrete “codebook” of possibilities, much like reducing millions of colors to a named palette of 16.

The research indicates that Noise and Sparsity perform best for “in the wild” videos, while quantization struggles with the complexity of real-world actions.

Structure of Latent Actions: 128 Dimensions of Understanding

Latent actions are represented as 128-dimensional vectors, where each dimension controls a specific aspect of change. For instance, one dimension might control horizontal movement, another vertical force, and so on. The model autonomously learns what each of these 128 “sliders” controls – a vastly more nuanced representation than simple “left,” “right,” or “jump.”

Architecture and Training: Unleashing AI on YouTube

The complete system leverages a frozen vision encoder, VQGAN2-L, pre-trained on millions of images by Meta. This encoder compresses visual data into compact feature vectors. The World Model itself is a large Vision Transformer (ViT-L) with 300 million parameters, adapted for images and videos.

The training dataset is immense: YouTube Temporal 1B, comprising approximately one billion video clips. This translates to an astounding 140 years of continuous video data, all without human annotation. The model processes 16 frames per clip at 4 FPS, in batches of 1024 clips, over 30,000 iterations – equivalent to viewing 31 million clips and 490 million frames.

In the Wild Training: The Challenges of Unstructured Data

Training “in the wild” on YouTube videos presents unique challenges:

- No Common Embodiment: Videos feature humans, animals, and objects, making it difficult to define a universal “action.”

- Environmental Noise: Unintentional movements like rustling leaves need to be distinguished from deliberate actions.

- Infinite Diversity: The sheer variety of actions in natural videos is limitless.

Crucially, the model learns the spatial localization of actions. Since there’s no common “body,” actions are learned relative to their position in the camera frame. An action like “move left at position X” can apply to a person, a ball, or any object at that specific location. This universal applicability is key—the model doesn’t differentiate between object types for a given movement.

Action Transfer and Cyclic Consistency

A powerful validation of this approach is action transfer. If latent actions truly represent understanding, they should be transferable. The system can extract an action (e.g., “moving left”) from one video (e.g., a person walking left) and apply it to a completely different video (e.g., a ball rolling left).

The concept of cyclic consistency further verifies this:

- Extraction: An action (Z_original) is extracted from Video A (e.g., a man walking).

- Application: The action is applied to Video B (e.g., a flying ball), resulting in a “walking ball.”

- Re-extraction: The action is re-extracted from this new “walking ball” video (Z_reextracted).

- Verification: If Z_original is equal to Z_reextracted, it confirms the model truly understands the action, independent of the object performing it.

Tests show remarkable results: the error difference between the original and re-extracted action is minimal (5-30%), indicating that the model generalizes rather than just memorizing. It genuinely understands “moving left” as a concept applicable to anything.

Applications to Robotics: A Near-Future Reality

Perhaps the most exciting implication is for robotics. The World Model, trained on YouTube, can be used to control real robots. A “controller” translates real robotic actions (e.g., “move arm 5cm forward”) into the latent actions understood by the World Model. This means robots can learn complex manipulation tasks by simply observing human actions on YouTube, without direct programming or extensive supervised training.

Performance on robotic manipulation tasks (using the Franka Emika Panda arm) shows that a World Model trained only on YouTube, without ever seeing a robot during training, can achieve performance comparable to specialized models directly trained on robotic data. This is a monumental leap.

Using a planning algorithm called the Cross-Entropy Method (CEM), the World Model can plan optimal sequences of actions: generating random sequences, evaluating them in simulation, selecting the best ones, and iterating to converge on the most efficient action plan.

The Future: AGI within Reach

This research brings us closer to AGI.

- Short-term (1-2 years): Improved video generation models, better robotic simulators, and significantly reduced annotation costs for video data.

- Mid-term (3-5 years): Robots learning directly from YouTube, AI assistants understanding the physical world, and hyper-realistic physics in video games.

- Long-term (5-10 years): This is a foundational step towards AGI—AI systems capable of reasoning about the physical world, possessing a holistic understanding of the cosmos, and operating autonomously in unstructured environments.

The implications are profound. As Yann LeCun eloquently states, “To build intelligent systems capable of reasoning and planning in the real world, we must build systems capable of predicting the future.” This paper is a significant stride in that direction, heralding an era where AI moves beyond pattern recognition to genuine understanding and interaction with our physical reality. The world is about to change, and this research gives us a glimpse into how.

🔍 Discover Kaptan Data Solutions — your partner for medical-physics data science & QA!

We're a French startup dedicated to building innovative web applications for medical physics, and quality assurance (QA).

Our mission: provide hospitals, cancer centers and dosimetry labs with powerful, intuitive and compliant tools that streamline beam-data acquisition, analysis and reporting.

🌐 Explore all our medical-physics services and tech updates

💻 Test our ready-to-use QA dashboards online

Our expertise covers:

🔬 Patient-specific dosimetry and image QA (EPID, portal dosimetry)

📈 Statistical Process Control (SPC) & anomaly detection for beam data

🤖 Automated QA workflows with n8n + AI agents (predictive maintenance)

📑 DICOM-RT / HL7 compliant reporting and audit trails

Leveraging advanced Python analytics and n8n orchestration, we help physicists automate routine QA, detect drifts early and generate regulatory-ready PDFs in one click.

Ready to boost treatment quality and uptime? Let’s discuss your linac challenges and design a tailor-made solution!

Get in touch to discuss your specific requirements and discover how our tailor-made solutions can help you unlock the value of your data, make informed decisions, and boost operational performance!

Comments